THE LOTTERY TICKET HYPOTHESIS Paper Reading Notes

Published:

THE LOTTERY TICKET HYPOTHESIS: FINDING SPARSE, TRAINABLE NEURAL NETWORKS

Overview

A large neural network contains a subnetwork (a "winning ticket") that, when trained in isolation, can reach performance similar to that of the full network.

The paper finds that a standard pruning technique automatically uncovers such trainable subnetworks from fully-connected and convolutional feed-forward networks.

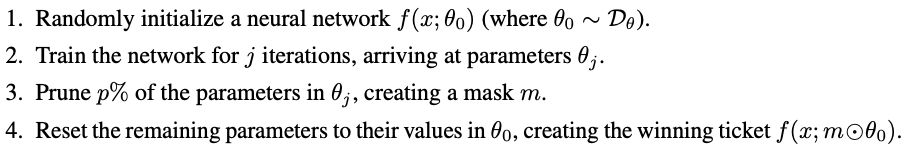

Pruning algorithm

Note: each unpruned connection's value is then reset to its initialization from the original network, i.e. the value it had before training.

When their parameters are randomly reinitialized, winning tickets no longer match the performance of the original network, offering evidence that these smaller networks do not train effectively unless they are appropriately initialized.
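The difference between a winning ticket and the randomly reinitialized control can be sketched as follows. This is a minimal illustration, not the paper's code: the helper names `reset_to_init` and `random_reinit`, the hand-written mask, and the zero-mean Gaussian initialization with `std=0.1` are all assumptions made for the example.

```python
import random

def reset_to_init(init_weights, mask):
    """Winning ticket: surviving weights take their original initial values."""
    return [w0 if m else 0.0 for w0, m in zip(init_weights, mask)]

def random_reinit(mask, rng, std=0.1):
    """Control: same sparsity pattern, but surviving weights are freshly sampled.

    The Gaussian distribution and std are assumptions for illustration only.
    """
    return [rng.gauss(0.0, std) if m else 0.0 for m in mask]

rng = random.Random(0)
init_weights = [rng.gauss(0.0, 0.1) for _ in range(8)]
mask = [1, 0, 1, 1, 0, 0, 1, 0]  # hypothetical mask produced by a pruning step

ticket = reset_to_init(init_weights, mask)   # same structure, original init
control = random_reinit(mask, rng)           # same structure, new random init
```

Both lists share the same sparsity pattern; only the values of the surviving weights differ, which is the variable the control experiment isolates.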

The algorithm shown in the picture above is one-shot pruning.

One-shot: the network is trained once, p% of the weights are pruned, and the surviving weights are reset to their initial values.
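The one-shot procedure can be sketched in a few lines. This is a toy illustration under stated assumptions: weights are a flat list, pruning removes the smallest-magnitude weights, and `toy_train` is a hypothetical stand-in for real training that just perturbs the weights. None of these names come from the paper.

```python
import random

def prune_smallest(weights, mask, prune_fraction):
    """Return a new mask with the smallest-magnitude surviving weights removed."""
    surviving = [i for i, m in enumerate(mask) if m]
    n_prune = int(len(surviving) * prune_fraction)
    by_magnitude = sorted(surviving, key=lambda i: abs(weights[i]))
    new_mask = list(mask)
    for i in by_magnitude[:n_prune]:
        new_mask[i] = 0
    return new_mask

def toy_train(weights, mask, seed=0):
    """Hypothetical stand-in for training: perturbs the unmasked weights."""
    rng = random.Random(seed)
    return [(w + rng.uniform(-1.0, 1.0)) * m for w, m in zip(weights, mask)]

def one_shot_ticket(init_weights, prune_fraction):
    mask = [1] * len(init_weights)
    trained = toy_train(init_weights, mask)                # 1. train once
    mask = prune_smallest(trained, mask, prune_fraction)   # 2. prune p% of weights
    # 3. reset survivors to their values from before training
    ticket = [w0 if m else 0.0 for w0, m in zip(init_weights, mask)]
    return ticket, mask

init = [0.5, -0.2, 0.1, 0.9, -0.7, 0.3, -0.4, 0.8, 0.05, -0.6]
ticket, mask = one_shot_ticket(init, prune_fraction=0.2)
```

The pruning decision is made on the trained weights, while the returned ticket holds the original initial values of the survivors.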

Iterative pruning: the network is repeatedly trained, pruned, and reset over n rounds; each round prunes $p^{\frac{1}{n}}\%$ of the weights that survive the previous round.
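A sketch of the iterative loop, under the same toy assumptions as a flat weight list and magnitude-based pruning. To keep the arithmetic unambiguous, the example takes a fixed per-round pruning rate `rate_per_round` directly rather than deriving it from p and n; that parameter, along with `toy_train` and the other helper names, is an assumption of this example and not taken from the paper.

```python
import random

def prune_smallest(weights, mask, rate):
    """Remove the smallest-magnitude `rate` fraction of the surviving weights."""
    surviving = [i for i, m in enumerate(mask) if m]
    n_prune = int(len(surviving) * rate)
    by_magnitude = sorted(surviving, key=lambda i: abs(weights[i]))
    new_mask = list(mask)
    for i in by_magnitude[:n_prune]:
        new_mask[i] = 0
    return new_mask

def toy_train(weights, mask, rng):
    """Hypothetical stand-in for training: perturbs the unmasked weights."""
    return [(w + rng.uniform(-1.0, 1.0)) * m for w, m in zip(weights, mask)]

def iterative_ticket(init_weights, rate_per_round, n_rounds, seed=0):
    rng = random.Random(seed)
    mask = [1] * len(init_weights)
    weights = list(init_weights)
    for _ in range(n_rounds):
        trained = toy_train(weights, mask, rng)               # train
        mask = prune_smallest(trained, mask, rate_per_round)  # prune survivors
        # reset survivors to their original initial values
        weights = [w0 if m else 0.0 for w0, m in zip(init_weights, mask)]
    return weights, mask

init_rng = random.Random(1)
init = [init_rng.gauss(0.0, 0.1) for _ in range(64)]
ticket, mask = iterative_ticket(init, rate_per_round=0.5, n_rounds=3)
```

With a fixed per-round rate $r$, the fraction of weights remaining after $n$ rounds is $(1 - r)^n$, so the network shrinks geometrically while each round removes only part of what is left.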

Results show that iterative pruning finds winning tickets that match the accuracy of the original network at smaller sizes than does one-shot pruning.